From Zero to Multi-Modal Interactive Dialogue for Robots

Join us for a hands-on tutorial with the new ROS standard for human-robot interaction!

23 October 2022, from 09:00 to 17:00

Description

The new ROS standard REP-155 (aka ‘ROS4HRI’) is currently under public review. It defines a set of standard topics, conventions and tools to represent and reason about humans in a ROS environment.

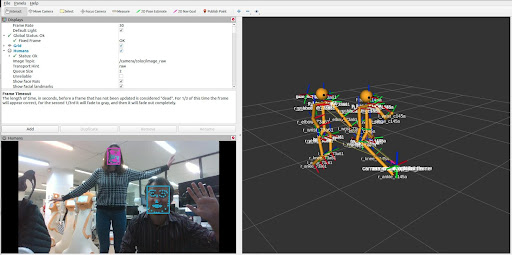

During this one-day IROS tutorial, we will present the ROS4HRI standard, and program a small simulated interactive system able to track and react to faces, skeletons and chats.

You will return home with a good understanding of the ROS4HRI spec, and hands-on experience with several key open-source, ROS4HRI-compatible nodes for human-robot interaction (including mediapipe-based face detection, skeleton tracking, speech recognition, dialogue management, etc.).


Programme

The tutorial will be a hands-on, highly interactive day. You will be programming a ‘mini-project’ using the ROS4HRI framework, with the close guidance of the workshop organisers.

The tutorial outline is as follows:

9:00-9:30

Welcome & introduction: the challenge of modelling humans, or why don’t we already have a ROS standard for HRI?

9:30-11:00

· Installation and introduction to OfficeBots, our interactive, multiplayer simulator for HRI: https://github.com/severin-lemaignan/officebots

· Initial exploration of the ROS4HRI API

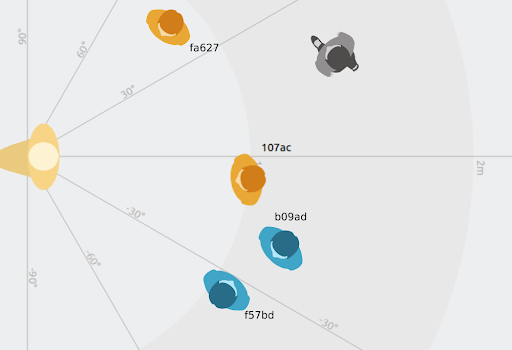

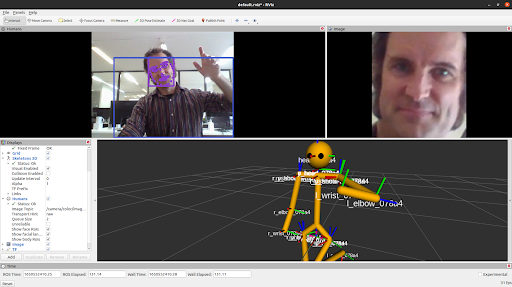

· Warm-up programming exercise: detect and track 3D whole-body humans around a robot

11:00-11:30

Coffee break

11:30-12:15

Formal presentation of the ROS4HRI standard (REP-155)

12:15-13:30

Part I of the mini-project: ‘Create a multi-modal chatbot from the ground up’: gaze and body tracking using mediapipe; simple gesture recognition

13:30-14:30

Lunch break

14:30-16:30

Part II of the mini-project: ‘Create a multi-modal chatbot from the ground up’: voice processing and speech recognition using vosk

16:30-17:00

Coffee break

17:00-19:00

Part III of the mini-project: ‘Create a multi-modal chatbot from the ground up’: final integration with the rasa dialogue manager, and testing!

Registration

Registration to the workshop is done through the general IROS registration website:

Background

ROS is widely used in the context of human-robot interaction (HRI). However, to date, no effort (e.g. [1][2]) has succeeded in establishing broadly accepted interfaces and pipelines for that domain, of the kind found in other parts of the ROS ecosystem (for manipulation or 2D navigation, for instance). As a result, many different implementations of common tasks (skeleton tracking, face recognition, speech processing, etc.) coexist, and while they achieve similar goals, they are generally not compatible with each other, which hampers code reusability, experiment replicability, and the general sharing of knowledge.

The ROS4HRI framework was recently introduced to address this issue. It provides a consistent set of interfaces and conventions, standardised in the recently published ROS REP-155 "ROS for HRI". You can read it online here: https://github.com/ros-infrastructure/rep/pull/338

REP-155 defines a set of ROS messages and services to describe the software interactions relevant to the HRI domain, as well as a set of conventions (e.g. topic structure, tf frames) to expose human-related information. Since its publication, the standard has attracted considerable attention; it was, for instance, mentioned as “an exciting upcoming framework” during Dr. Leila Takayama’s HRI '22 keynote talk.
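To give a flavour of what such conventions look like in practice, here is a minimal sketch in plain Python (no ROS installation needed). It assumes, based on our reading of the REP-155 draft, that human-related topics live under a `/humans` namespace, grouped by feature type (`faces`, `bodies`, `voices`, `persons`), with a `tracked` topic listing the currently known IDs, and that tf frames are named `<kind>_<id>`. The helper names (`tracked_topic`, `feature_topic`, `tf_frame`) and the example ID are hypothetical and only serve the illustration; please refer to the REP text for the authoritative names.

```python
# Illustrative helpers only: they build strings that follow the naming
# conventions as we understand them from the REP-155 draft (assumption).
# None of these functions are part of any ROS4HRI library.

HUMANS_NS = "/humans"
FEATURES = ("faces", "bodies", "voices", "persons")


def tracked_topic(feature: str) -> str:
    """Name of the topic listing the IDs currently tracked for a feature type."""
    if feature not in FEATURES:
        raise ValueError(f"unknown feature type: {feature!r}")
    return f"{HUMANS_NS}/{feature}/tracked"


def feature_topic(feature: str, feature_id: str, subtopic: str) -> str:
    """Name of a per-ID topic, e.g. the region of interest of one face."""
    if feature not in FEATURES:
        raise ValueError(f"unknown feature type: {feature!r}")
    return f"{HUMANS_NS}/{feature}/{feature_id}/{subtopic}"


def tf_frame(kind: str, feature_id: str) -> str:
    """Name of the tf frame attached to one detected feature."""
    return f"{kind}_{feature_id}"


if __name__ == "__main__":
    face_id = "bf3d"  # hypothetical ID, as a face detector might assign
    print(tracked_topic("faces"))
    print(feature_topic("faces", face_id, "roi"))
    print(tf_frame("face", face_id))
```

In a real node, these names would be passed to the usual ROS subscriber and tf lookup calls; keeping them behind small helpers makes it easy to adjust if the final standard differs from this sketch.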

The objective of this tutorial is to give the robotics community, and in particular the human-robot interaction community, an opportunity to gain a deep understanding of this framework, both in terms of the ROS4HRI specification itself and in terms of practical know-how. To this end, the tutorial will focus on hands-on, project-based programming: participants will build, from scratch, a multi-modal interactive dialogue manager that understands face and body back-channels and is able to run on actual robots.

Target audience

The primary target audience of the tutorial is practitioners in Human-Robot Interaction who are interested in modern and complex human perception pipelines for robotics. The tutorial will also be of relevance to people working on e.g. learning from demonstration, as we will cover human 3D whole-body tracking.

The tutorial will involve a lot of hands-on programming; we will use Python as our primary language. Good working knowledge of Python is therefore required.

In addition, working knowledge of ROS (Robot Operating System) is strongly recommended to make the most of the tutorial.

Pre-requisites

The tutorial will involve hands-on programming in Python and ROS. Good familiarity with both is required to make the most of the tutorial.

Please come with a laptop running Linux, with ROS Noetic installed. We will make use of the standard ROS visualisation tools like rviz.

If you are running ROS in a virtual machine: part of the tutorial involves running the 'OfficeBots' simulator which requires 3D acceleration. As such, make sure your virtual machine has 3D acceleration enabled.

Organizers

This project has received funding from the following European Union’s Horizon 2020 research and innovation programmes: H2020 grant agreement No 871245 (SPRING); Marie Skłodowska-Curie grant agreement No 955778 (PERSEO); Marie Skłodowska-Curie grant agreement No 801342 (Tecniospring INDUSTRY) and the Government of Catalonia's Agency for Business Competitiveness (ACCIÓ).